Introducing the world’s largest natural-language artificial intelligence model – the multi-lingual, multimedia-generating Wu Dao.

China’s first super-scale natural-language AI was unveiled in early 2021, and an even larger version of 1.75 trillion parameters was released in June. It’s currently the world's largest natural-language artificial intelligence (AI) model, able to understand and generate coherent text and images based on content and images in Chinese, as well as English and other mainstream languages.

Development of the AI, called Wu Dao, was led by Jie Tang, a professor in Tsinghua’s Department of Computer Science and Technology and vice director of academics at the Beijing Academy of Artificial Intelligence (BAAI).

In essence, Wu Dao is a model that studies datasets of images, video and natural-language text to reproduce statistical patterns that can generate coherent and relevant words and visuals, explains Tang.

It’s thought that Wu Dao and similar tools could eventually help streamline paperwork, and generate video captioning and press releases, among other things. Tang and his team have created start-up Zhipu.AI to further develop these uses.

Wu Dao 2.0’s creators point out that its number of parameters is ten times that of its most high-profile rival GPT-3, which was launched for beta testing in 2020 by San Francisco-based research laboratory OpenAI.

When GPT-3 was released, pundits were quick to explore its ability to come up with linguistically sound poems, memes and songs, although the meaning created by the AI was often nonsensical. Today, GPT-3’s source code is licensed exclusively by Microsoft.

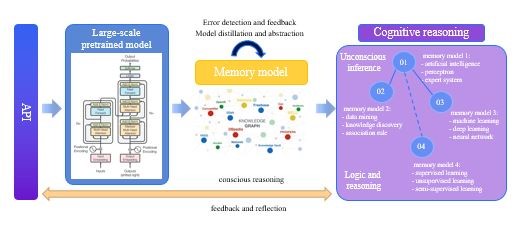

While Wu Dao 2.0 will undoubtedly improve upon this output, the ultimate hope, explains Tang, is that “the next generation of these tools will incorporate pretrained language models, large-scale knowledge graphs, and logical reasoning to produce meaningful content as well as, if not better, than humans”.

In fact, at the same time that Wu Dao 2.0 was released, the Tang’s team also introduced China's first virtual student, who joined the Department of Computer Science and Technology at Tsinghua. Named Hua Zhibing, she is a personification of the Wu Dao model, able to learn from all kinds of data to improve her cognition over time. Using Wu Dao, Zhibing is already able to compose music, reason, react to emotions, answer questions and even code, among other things. Zhibing was co-developed by the Beijing Academy of Artificial Intelligence, Tsinghua AI spin-off Zhipu AI and Microsoft-owned Asian chat bot pioneers, Xiaoice.

Driven by the most up to date Wu Dao dataset, Hua Zhibing, China's first virtual student, joined the Department of Computer Science and Technology at Tsinghua in June 2021.

Making international AI

This isn’t Tang’s first attempt at counteracting the English-language bias of AI. In 2013, he and his team at Tsinghua created XLORE, the first large-scale cross-lingual knowledge graph to provide advanced knowledge linkages between Chinese and English text. The group used machine learning on Wikipedia in Chinese and English, as well as two Chinese-language encyclopedias to balance a discrepancy in language inputs due to the size of Wikipedia in English.

“XLORE was built to address the scarcity of non-English knowledge, the noise in the semantic relations, and the limited coverage of equivalent cross-lingual entities,” explains Tang. XLORE, he says, has also been used to help train Wu Dao.

Before that, Tang’s Tsinghua team had already created a researcher database using a multi-lingual data-mining tool. The database, AMiner, was launched 2006. The mining algorithm collates academic profile and collaboration information in multiple languages, helps to resolve name ambiguity, offers social influence analysis and recommends research partners. So far, it has indexed more than 133 million researchers. The work was first presented in 2008 at annual international data-mining conference KDD’08. In 2020, it won the Association for Computing Machinery’s SIGKDD Test-of-Time Award.

Four subsets of Wu Dao

Tang’s Wu Dao team includes more than one hundred researchers, not only from Tsinghua, but also Peking University, Renmin University of China and the Chinese Academy of Sciences. In March 2021, BAAI unveiled four distinct branches of the AI.

Professor Jie Tang from Tsinghua’s Department of Computer Science and Technology is also Vice Director of Academics at the Beijing Academy of Artificial Intelligence (BAAI). He spoke about key eras in AI innovation at the launch of Wu Dao 1.0 in March 2021.

The first is Wen Hui, a model designed to explore cognitive ability of pretraining models. It can create poems, videos, and images with captions. Its potential uses include simplifying work for e-commerce retailers by creating product descriptions and facilitating product shots. BAAI says Turing tests show the model achieves near-human performance in poetry. Wen Hui was partly trained on the largest pretraining model to-date, featuring 1.75 trillion machine-learning parameters, and features complex reasoning, and text and image search functions.

The aim is to keep developing Wu Dao with more cognitive AI functions to instill it with powerful reasoning and sense-making abilities.

Wen Hui showcases a series of core technical innovations:

FastMoE is the first Mixture-of-Expert (MoE) framework that supports PyTorch and native GPU acceleration, which is the foundation for the realization of a trillion-parameter model. It is easy-to-use, highly flexible, and runs 47 times faster than a naive PyTorch implementation.

GLM (General Language Model) is a general pretraining framework based on autoregressive blank filling, that unifies different pretraining models including BERT and GPT, and achieves state-of-the-art performance on natural language understanding, unconditional generation, and conditional generation at the same time.

The P-tuning 2.0 algorithm closes the performance gap between few-shot learning and fully-supervised learning.

CogView is a novel framework that achieves text-to-image generation via large-scale Transformers pretrained on aligned pairs of texts and images. It significantly outperforms OpenAI's DALL·E model on the MS COCO benchmark.

The Wen Lan model was trained on a Chinese-language dataset consisting of 650 million image-text pairs. It maps sentences and images into the same space and can be used for pure cross-modality searches between images and text. It will eventually be able to create images or videos given text, and caption videos, says Tang. Three applets have already been developed using Wen Lan: MatchSoul, which matches images with humorous, literary, and philosophical text; Soul-Music, which matches images with relevant song lyrics; and AI’s Imaginary World, which matches sentences with relevant high-quality images.

Wen Yuan is the world’s largest Chinese-language AI model and harnesses a suite of efficient, cost-effective and environmentally friendly techniques. Using Wen Yuan's framework, for example, one model with 198 billion parameters only required the processing power of 320 GPUs to run, while GPT-3, which has 175 billion parameters, uses 10,000 GPUs. The team working on Wen Yuan released several Cost-effective Pre-trained Models (CPM-2), which achieve excellent performance in eight tasks in Chinese, including reading comprehension, summarization, and numerical reasoning.

Finally, a tool called Wen Su has been built to predict ultra-long protein structures, and has already been trained on a variety of datasets.

Talks are underway about deploying these tools for e-commerce giant Alibaba, search engine Sogou, and Xinhua News Agency, among others.